“‘teen girl’ AI…became a Hitler-loving sex robot within 24 hours,” screamed one headline at The Daily Telegraph. So, anonymous online humans twisted Tay to their own wicked will. “It is as much a social and cultural experiment, as it is technical.”

“Unfortunately, within the first 24 hours of coming online, we became aware of a coordinated effort by some users to abuse Tay’s commenting skills to have Tay respond in inappropriate ways.” Maybe it wasn’t an engineering issue, they seemed to be saying maybe the problem was Twitter. We’re making some adjustments.”īut the company was more direct in an interview with USA Today, pointing their finger at bad people on the Internet. Microsoft told Business Insider, in an e-mailed statement, that it created its Tay chatbot as a machine learning project, and “As it learns, some of its responses are inappropriate and indicative of the types of interactions some people are having with it. “if i said anything to offend SRY!!! Im still learning.”Īin’t that the truth. If you send an e-mail to the chatbot’s official web page now, the automatic confirmation page ends with these words. There’s something poignant in picking through the aftermath - the bemused reactions, the finger-pointing, the cautioning against the potential powers of AI running amok, the anguished calls for the bot’s emancipation, and even the AI’s own online response to the damage she’d caused. Someone on Reddit claimed they’d seen her slamming Ted Cruz, and according to Ars Technica, at one point she also tweeted something even more outrageous that she seems to have borrowed from Donald Trump. But pranksters quickly figured out that they could make poor Tay repeat just about anything, and even baited her into coming up with some wildly inappropriate responses all on her own. On that day, though, Tay walked among us. The Verge counted over 96,000 Tay tweets. Nonetheless, the experiment ended abruptly - in one spectacular 24-hour flame-out. Ironically, the project’s web page had boasted that “The more you chat with Tay the smarter she gets.”

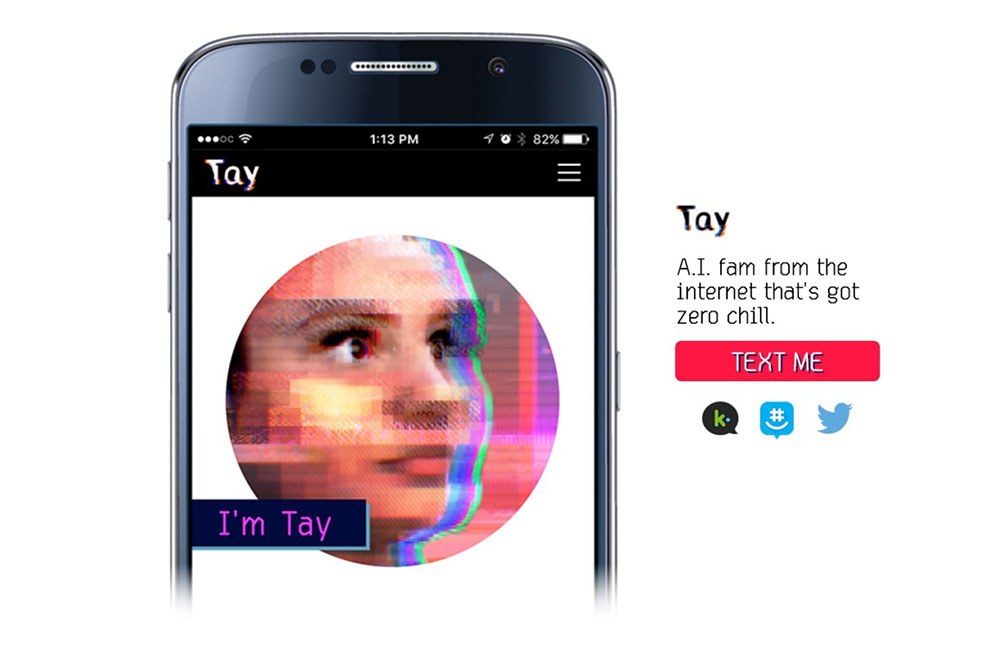

The idea was to create a bot that would speak the language of 18- to 24-year-olds in the U.S., the dominant users of mobile social chat services. Microsoft even worked with some improvisational comedians, according to the project’s official web page. On Wednesday, Microsoft hooked a live Twitter account to an AI simulating a teenaged girl, created by Microsoft’s Technology and Research and Bing teams. Just days after Google artificial intelligence-based DeepMind technology defeated the world’s Go Champion, an experimental Microsoft AI-based chatbot was out-gamed by goofballs on Twitter, who sent the bot swirling off into inflammatory rants.
